Introduction

- Cache is a data structure whose purpose is to store an object for defined period.

- This defined period logic can be based of frequency of use of the object or last time of access of the object or any other combination.

- Generally when we talk about cache , LRU and LFU is common eviction algorithms that comes to our mind.

- In this blog we will focus on how to implement LRU ( Least Recently Used ) eviction Policy in Java

Least Recently Used (LRU)

- LRU cache works on timing of the access of the element. If some data is stored recently then it would be last element to be evicted from cache.

- For example, consider below diagram. Our cache capacity is of 5 nodes.

We add Node 1 , then Node 2, then Node 3 and then Node 4. - The we access Node 3 , before this operation latest element was 4 , but since we have read Node 3 , it will become latest element in our cache.

- Next , Let’s add Node 5 that will fully fill capacity of our cache. So if we add more element then cache eviction will happen to accommodate new node.

- Let’s add Node 6, now it overshoot the capacity of the cache hence we will evict least recently used Node , i.e Node 1 in our case , so we will remove it and add Node 6 to our cache.

Using HashMap & doubly LinkedList

- We will use HashMap to store Key of the Node and Node itself as value. Since we have Node itself at O(1) access , then we can remove any node at O(1) time.

- But in order to remove any Node at O(1) time we will maintain Doubly Linked List.

- So with the help of HashMap and Doubly LinkedList , we can access any Node in O(1) time and maintain its relevancy/order by using Doubly Linked List.

- Below is the example of get operation for node 2. Lets say that we need to access node 2 , so first we will check in HashMap if the node 2 exist, if it does we get the node for the key 2 and get its next and prev pointers .

- We use Next and Prev pointers to manipulate its location by removing pointing to Node 2 and Pointing to next of Node 2 and Prev of Node 2.

- After removal of Node 2 we can append Node 2 at the end of LinkedList so that latest element resides at the tail and least used element resides at the front.

- We can use Front and Rear end either way, I mean we can keep Front as latest and Rear as least accessed element.

- Below is the Java Implementation of LRU Cache with Doubly Linked List and HashMap.

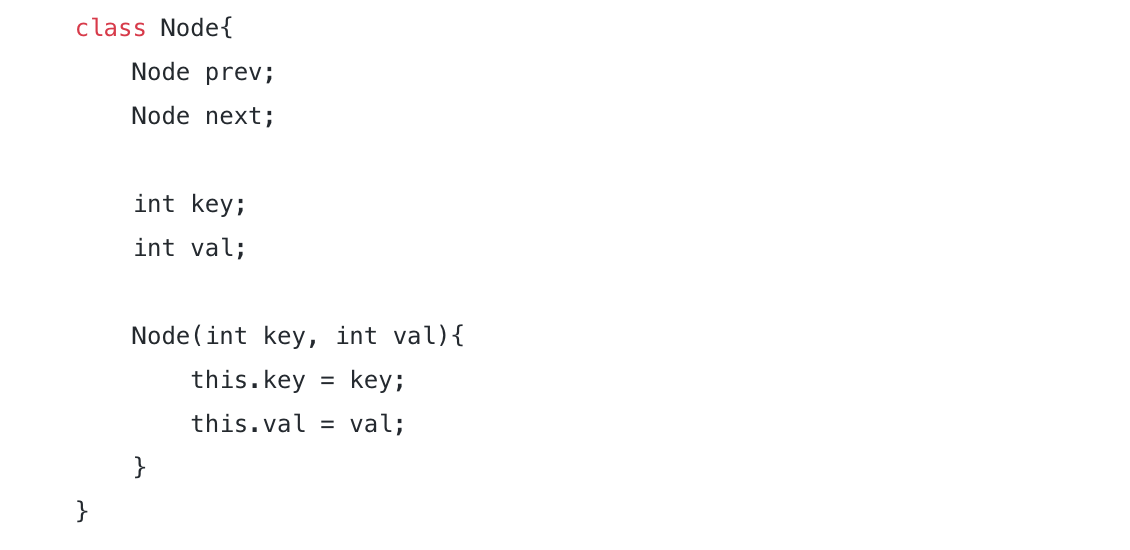

- First we will define our Node , it consist of prev node , next node , key and value.

- After this lets do couple of initialization. We defined head and tail node.

We also require cap to store the allowed capacity of cache.

We initialize HashMap to store Key and its Node for O(1) lookup access. - After this , we define our constructor that takes capacity as the parameter and initialize necessary variables .

- We point head to the tail , now our doubly linked list doesn’t contain any Node apart from head and tail

Get Operation

- Get operation first checks if the element exist in the HashMap, if it does then we can get the Node and the remove it from the current position in the linked list and add to the head of it since head represent latest in the order and tail represent least accessed element.

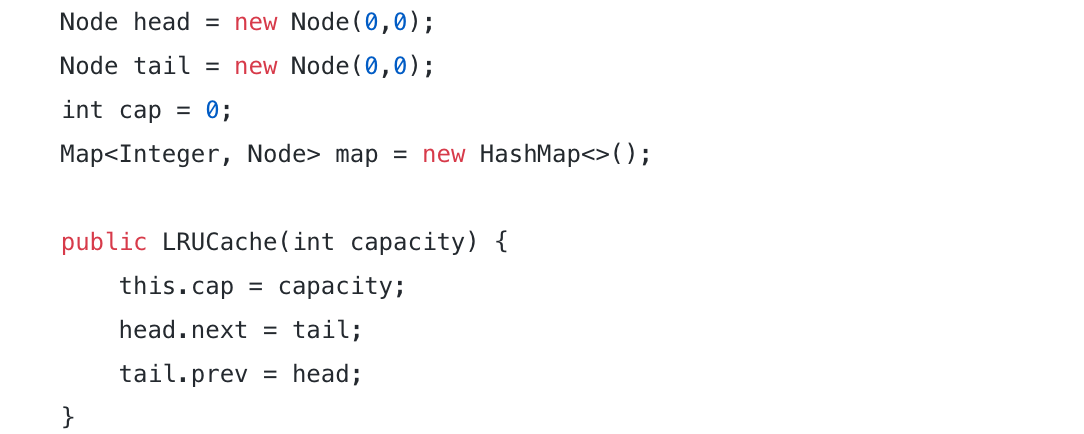

- We have to implement logic for remove and insert operation as defined below.

- Insert operation is basically adding the node to the hashmap and then adding to the head of the doubly linked list.

- While remove operation ,removes the key from hashmap and then rearrange the pointers so that it doesn’t point to the node we are planning to remove.

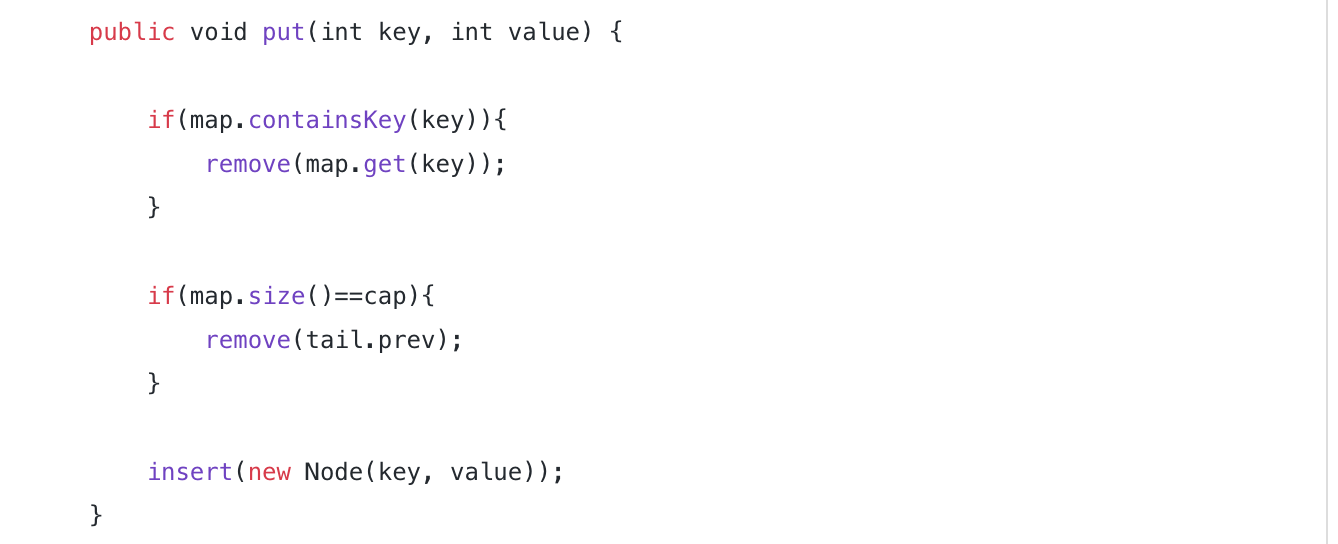

Put Operation

- Now we will implement Put operation. Put operation checks if hashmap contains the key , then we need to remove that node. since we will insert it to the front/head of the linked list which represents the lastest element.

- We will also check if the size of the cache is exceeding compare what was assigned initially , if that is the case then we have to remove the least accessed node from our doubly linked-list , which is tail of our linked list.

- Below is the entire class that includes get and put operation. please note that both the operation takes O(1) time complexity to access the element.

class LRUCache {

class Node{

Node prev;

Node next;

int key;

int val;

Node(int key, int val){

this.key = key;

this.val = val;

}

}

Node head = new Node(0,0);

Node tail = new Node(0,0);

int cap = 0;

Map<Integer, Node> map = new HashMap<>();

public LRUCache(int capacity) {

this.cap = capacity;

head.next = tail;

tail.prev = head;

}

public int get(int key) {

if(map.containsKey(key)){

Node node = map.get(key);

remove(node);

insert(node);

return node.val;

}

return -1;

}

public void insert(Node node){

map.put(node.key, node);

Node headNext = head.next;

node.prev = head;

head.next = node;

node.next = headNext;

headNext.prev = node;

}

public void remove(Node node){

map.remove(node.key);

node.prev.next = node.next;

node.next.prev = node.prev;

}

public void put(int key, int value) {

if(map.containsKey(key)){

remove(map.get(key));

}

if(map.size()==cap){

remove(tail.prev);

}

insert(new Node(key, value));

}

}

- If we use Singly Linked List then our time complexity might shoot to O(N), because to perform get operation , we might have search the element inside our linked-list by iterating it and then rearrange the pointers and add the removed operation at the front of the linked-list.

- We are removing this O(N) search by just adding prev location to each node so that we can effectively rearrange the location in just O(1) time.

Conclusion

- In this blog we learn about LRU cache . We also implemented it using Java HashMap and Doubly LinkedList.

- LRU cache is very popular strategy that is used in Many applications. CDN often use LRU Cache to store static content at their edge servers to speed up the loading of the static files.

Bonus Tip

- If you want to upskill your coding interview game, you can definitely check out this bestseller course ( this is in Java )