Introduction

- We often need to perform data movement tasks within cloud storage. For example, we might land data in a temporary location and later move it to a folder dedicated to long-term storage, deleting the objects from the original location.

- This is a very common task that every organization needs to perform on a regular basis.

- On-premises, we usually rely on batch infrastructure where cron jobs execute at a particular time or at a defined interval, and we have to manage both that batch infrastructure and the cron jobs themselves.

- In the cloud there is a better way. With serverless products, the provider manages the infrastructure and we pay only when our job actually runs, so we can build data movement jobs while focusing only on business logic.

- In Google Cloud, we have two options for moving GCS objects at a regular interval in a serverless way, built on serverless products such as Cloud Workflows and Cloud Run. Let's get started.

Option 1: Cloud Workflows + GCS REST API

- In this option, we use Cloud Workflows, Google Cloud's serverless workflow orchestration service.

- A workflow can call various Google Cloud services through their APIs, authenticating as a service account.

- Cloud Storage exposes a REST API, which we will use to copy and delete objects: https://cloud.google.com/storage/docs/copying-renaming-moving-objects

- Moving an object takes two REST API calls: first copy the object to its new location, then delete the original.
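To make the two calls concrete, here is a small Python sketch of the endpoint URLs involved. It assumes the copy step uses the JSON API's objects.rewrite method (the done flag and continuation token described for checkSuccessfulCopy belong to that method) and reuses the demo bucket and object names from this post. The helper names rewrite_url and delete_url are hypothetical; in the actual workflow these URLs are called from the YAML definition, not from Python.

```python
from urllib.parse import quote

# Base URL of the Cloud Storage JSON API.
STORAGE_API = "https://storage.googleapis.com/storage/v1"


def _enc(name: str) -> str:
    # Bucket and object names must be percent-encoded in the URL path,
    # including "/" inside object names such as "moving_folder/airports.csv".
    return quote(name, safe="")


def rewrite_url(src_bucket: str, src_object: str, dst_bucket: str, dst_object: str) -> str:
    """URL for objects.rewrite (POST): copies src to dst, possibly over several calls."""
    return (
        f"{STORAGE_API}/b/{_enc(src_bucket)}/o/{_enc(src_object)}"
        f"/rewriteTo/b/{_enc(dst_bucket)}/o/{_enc(dst_object)}"
    )


def delete_url(bucket: str, obj: str) -> str:
    """URL for objects.delete (DELETE): removes the original object."""
    return f"{STORAGE_API}/b/{_enc(bucket)}/o/{_enc(obj)}"


if __name__ == "__main__":
    # Hypothetical demo values taken from this post.
    print(rewrite_url("demo_12323", "airports.csv", "demo_12323", "moving_folder/airports.csv"))
    print(delete_url("demo_12323", "airports.csv"))
```

The encoding step matters because an unencoded "/" in the destination object name would be read as part of the URL path.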

Cloud Workflows Configuration YAML File

- moveGCSObject: copies the input file from the source to the destination.

- checkSuccessfulCopy: checks whether the copy finished. When the input file is large, the copy is done in chunks, so we check whether response.body.done is true. If it is not, we make another request to the moveGCSObject step and pass the rewrite token we received from the previous response.

- deleteOriginalObject: after the copy succeeds, deletes the original object.

https://asyncq.com/media/32019564cd97bc3aa0eca1726b4d188d
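The three workflow steps amount to a copy loop followed by a single delete. The Python sketch below mirrors that control flow under stated assumptions: call is a hypothetical stand-in for an authenticated HTTP client that returns the parsed JSON response, and the copy uses objects.rewrite with its rewriteToken query parameter. It illustrates the logic only; it is not the workflow definition itself.

```python
from typing import Callable, Dict, Optional
from urllib.parse import quote

STORAGE_API = "https://storage.googleapis.com/storage/v1"

# Hypothetical transport: call(method, url, query_params) returns the parsed
# JSON response body as a dict. A real one would be an authenticated HTTP client.
Call = Callable[[str, str, Dict[str, str]], dict]


def _enc(name: str) -> str:
    # Percent-encode bucket/object names, including "/" in object names.
    return quote(name, safe="")


def move_object(call: Call, bucket: str, src: str, dst: str, max_calls: int = 100) -> int:
    """Mirror moveGCSObject -> checkSuccessfulCopy -> deleteOriginalObject.

    Returns the number of rewrite calls needed to finish the copy.
    """
    rewrite = (f"{STORAGE_API}/b/{_enc(bucket)}/o/{_enc(src)}"
               f"/rewriteTo/b/{_enc(bucket)}/o/{_enc(dst)}")
    token: Optional[str] = None
    for attempt in range(1, max_calls + 1):
        # moveGCSObject: pass the token from the previous response, if any.
        params = {"rewriteToken": token} if token else {}
        body = call("POST", rewrite, params)
        # checkSuccessfulCopy: large objects can need several calls.
        if body.get("done"):
            break
        token = body["rewriteToken"]
    else:
        raise RuntimeError(f"copy not finished after {max_calls} calls")
    # deleteOriginalObject: reached only once the copy reports done.
    call("DELETE", f"{STORAGE_API}/b/{_enc(bucket)}/o/{_enc(src)}", {})
    return attempt
```

Keeping the delete outside the loop is the important design choice: the original object is removed only after a response with done set to true, so a half-finished copy never loses data.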

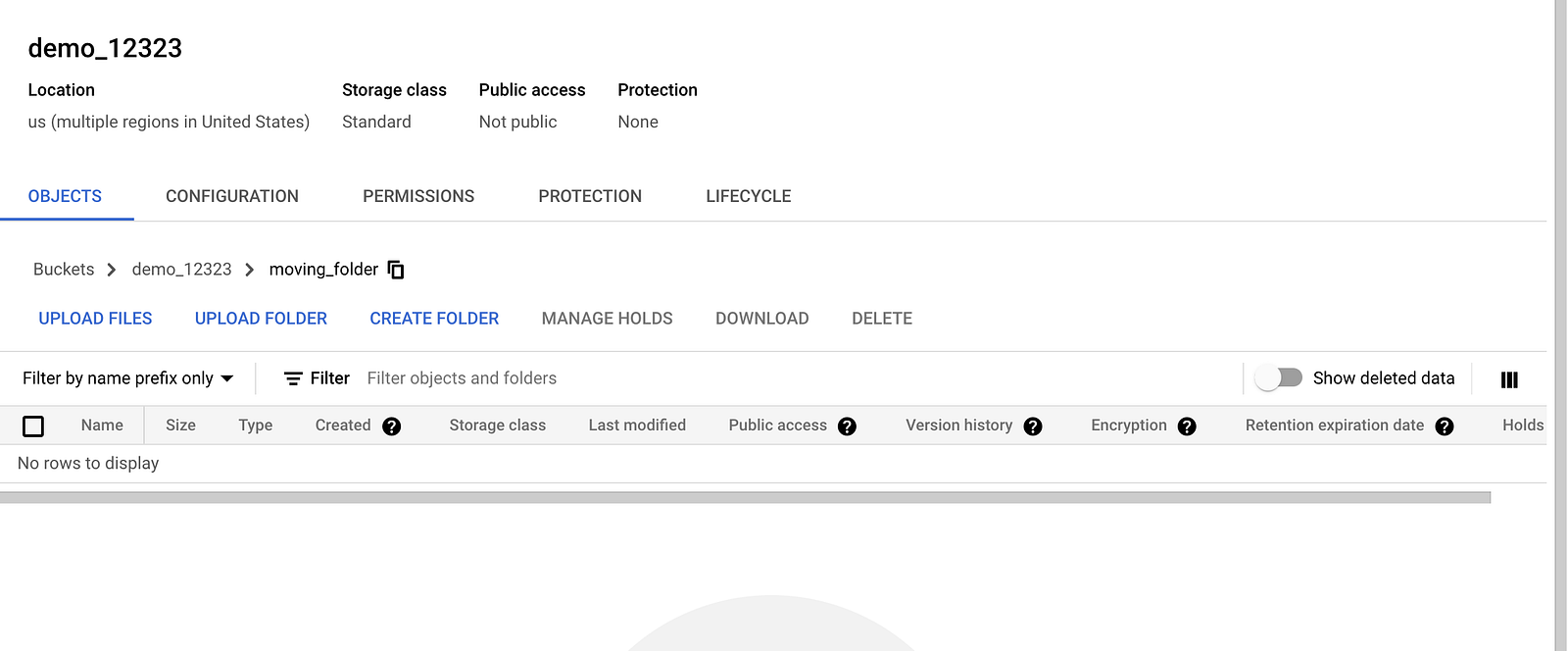

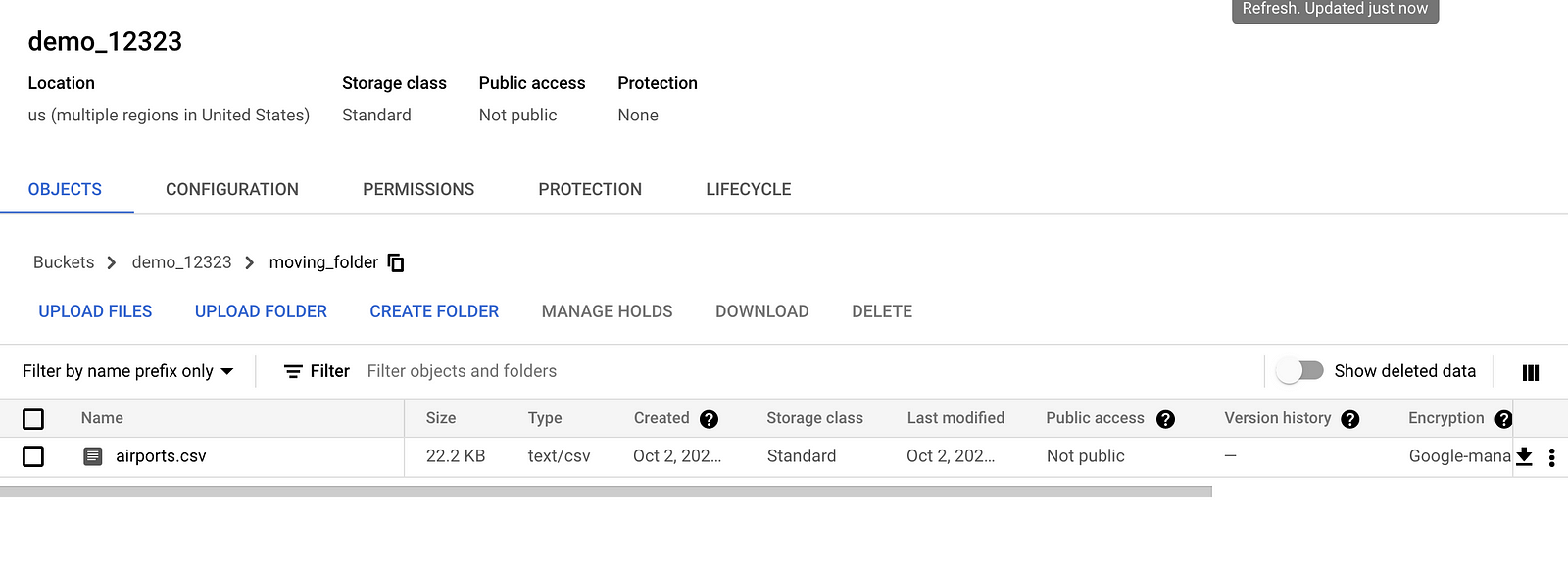

- Our input file, airports.csv, sits in the demo_12323 bucket, and we will move it to another folder called moving_folder.

- The workflow's first task copies the object to the destination, and the last task deletes the original object.

- We can confirm the logs and the response in the Cloud Workflows console as well as in Cloud Logging.

- The file is now present in moving_folder.

- The input file has been deleted from its original location.

Option 2: Cloud Workflows + [Cloud Run + gsutil]

- In this option, we use Google Cloud's gsutil tool to move objects from the source to the destination:

$ gsutil mv "$SOURCE_OBJECT" "$DESTINATION_OBJECT"

- Because the move has to run regularly and serverlessly, we package the gsutil command into a container image built from a Dockerfile and run it as a Cloud Run service.

- Cloud Run can execute a shell script: https://cloud.google.com/run/docs/quickstarts/build-and-deploy/shell

- So we have a Cloud Run service whose only responsibility is to execute the shell script whenever it receives a request.

- Once the Cloud Run service is running, we can invoke it on a schedule through Cloud Scheduler: https://cloud.google.com/run/docs/triggering/using-scheduler

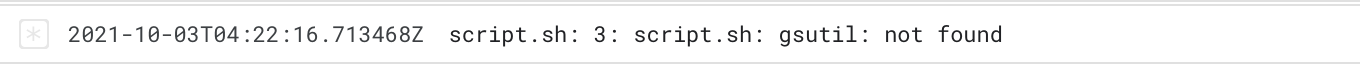

- Our shell script contains just the gsutil mv command.
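The Cloud Run shell quickstart wraps the script in a small web server so that each request runs it. As a sketch of the same idea in Python (a swapped-in stand-in, not the quickstart's own code), the service below runs gsutil mv once per POST request. The environment variable names SOURCE_OBJECT and DESTINATION_OBJECT and their default values are assumptions for illustration, and gsutil must be installed in the image for the subprocess call to succeed.

```python
import os
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List

# Hypothetical configuration: set these as environment variables on the
# Cloud Run service. The defaults reuse the demo names from this post.
SOURCE = os.environ.get("SOURCE_OBJECT", "gs://demo_12323/airports.csv")
DESTINATION = os.environ.get("DESTINATION_OBJECT", "gs://demo_12323/moving_folder/")


def build_mv_command(source: str, destination: str) -> List[str]:
    """Build the argv for `gsutil mv`, rejecting anything that is not a gs:// URI."""
    for uri in (source, destination):
        if not uri.startswith("gs://"):
            raise ValueError(f"expected a gs:// URI, got {uri!r}")
    return ["gsutil", "mv", source, destination]


class MoveHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Each request (for example from Cloud Scheduler) performs one move.
        result = subprocess.run(build_mv_command(SOURCE, DESTINATION),
                                capture_output=True, text=True)
        status = 200 if result.returncode == 0 else 500
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write((result.stdout + result.stderr).encode())


if __name__ == "__main__":
    # Cloud Run tells the container which port to listen on via PORT.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), MoveHandler).serve_forever()
```

Passing the command as a list to subprocess.run avoids going through a shell, so object names with spaces need no extra quoting. Returning 500 when gsutil fails lets the caller (for example Cloud Scheduler) see the job as failed.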

Make sure to install the Google Cloud SDK in the Dockerfile when building the image, so that the gsutil command is available when the script runs inside Cloud Run:

RUN echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] http://packages.cloud.google.com/apt cloud-sdk main" | tee -a /etc/apt/sources.list.d/google-cloud-sdk.list && curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | gpg --dearmor -o /usr/share/keyrings/cloud.google.gpg && apt-get update -y && apt-get install google-cloud-cli -y

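- Additionally, grant the service account that the Cloud Run service runs as (by default the Compute Engine default service account, xxxxxxxx-compute@developer.gserviceaccount.com) the Storage Admin role, or a narrower role such as Storage Object Admin on the buckets involved; otherwise we will get an access denied error.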

Some Other Considerations

- A natural question is whether we can use Cloud Functions to move GCS files instead. Not by executing gsutil as a shell command: unlike Cloud Run, where we control the container image, Cloud Functions doesn't let us run our own shell script with the Cloud SDK installed. We can, however, build the same job programmatically (Java, Python, JavaScript) using the GCS REST API.

- Cloud Composer (Apache Airflow) can also do the job easily, but it is not a serverless option, and we may have to manage it to some extent.
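For the programmatic route mentioned for Cloud Functions, a minimal Python sketch with the google-cloud-storage client library could look like this. It assumes that library is available in the function's runtime; move_blob and move_airports are hypothetical names, and the bucket and object names are the demo values from this post. Blob.rewrite is used instead of a one-shot copy so that large objects, which may need several rewrite calls, are handled the same way as in checkSuccessfulCopy.

```python
from typing import Any


def move_blob(client: Any, bucket_name: str, src_name: str, dst_name: str) -> None:
    """Copy src_name to dst_name with Blob.rewrite, then delete the original.

    `client` is expected to be a google.cloud.storage.Client (or a test double).
    """
    bucket = client.bucket(bucket_name)
    source = bucket.blob(src_name)
    destination = bucket.blob(dst_name)
    # Blob.rewrite returns (token, bytes_rewritten, total_bytes); the token is
    # None once the copy is complete, otherwise it is passed to the next call.
    token, _, _ = destination.rewrite(source)
    while token is not None:
        token, _, _ = destination.rewrite(source, token=token)
    # Delete the original only after the copy has completed.
    source.delete()


def move_airports(request):
    """Hypothetical HTTP Cloud Function entry point (Python runtime)."""
    # Imported lazily so move_blob can be exercised without the library installed.
    from google.cloud import storage

    move_blob(storage.Client(), "demo_12323", "airports.csv", "moving_folder/airports.csv")
    return "moved"
```

Keeping move_blob independent of how the client is created makes it easy to test with a fake client and to reuse from a scheduled trigger.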

Conclusion

- With serverless products such as Cloud Workflows and Cloud Run, we can easily run Cloud Storage object movement jobs without managing any infrastructure.

- Such a job can also be triggered on a schedule through Cloud Scheduler.