Introduction

In the era of cloud computing, serverless has become a buzzword we keep hearing about, and many of us end up convinced that serverless is the way to go for companies of all sizes because of its advantages. The main advantages of the serverless approach are:

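- No server management: the cloud provider provisions, patches and operates the underlying infrastructure.

- Scalability: services scale up and down automatically with the load.

- Pay as you go: you pay for what you actually use rather than for idle servers.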

In this article, we will explore how to use the serverless approach to build a data ingestion pipeline on Google Cloud (GCP).

Serverless Offerings in GCP

GCP offers serverless services in many areas, including the following:

- Computing: Cloud Run, Cloud Functions, App Engine

- Data warehouse: Google BigQuery

- Object Storage: Google Cloud Storage

- Workflow management: Cloud Workflows

- Scheduler: Cloud Scheduler

Combined, these services are enough to build an API data ingestion pipeline in GCP.

In this article, we look at two patterns for ingesting API data into Google BigQuery.

Business Requirement

Suppose the business wants to ingest (or scrape) data from various online sources to collect the top headlines each day. These headlines are loaded into Google BigQuery, and once a day a scheduled query runs on BigQuery to determine which media outlet published the most headlines on a given day.

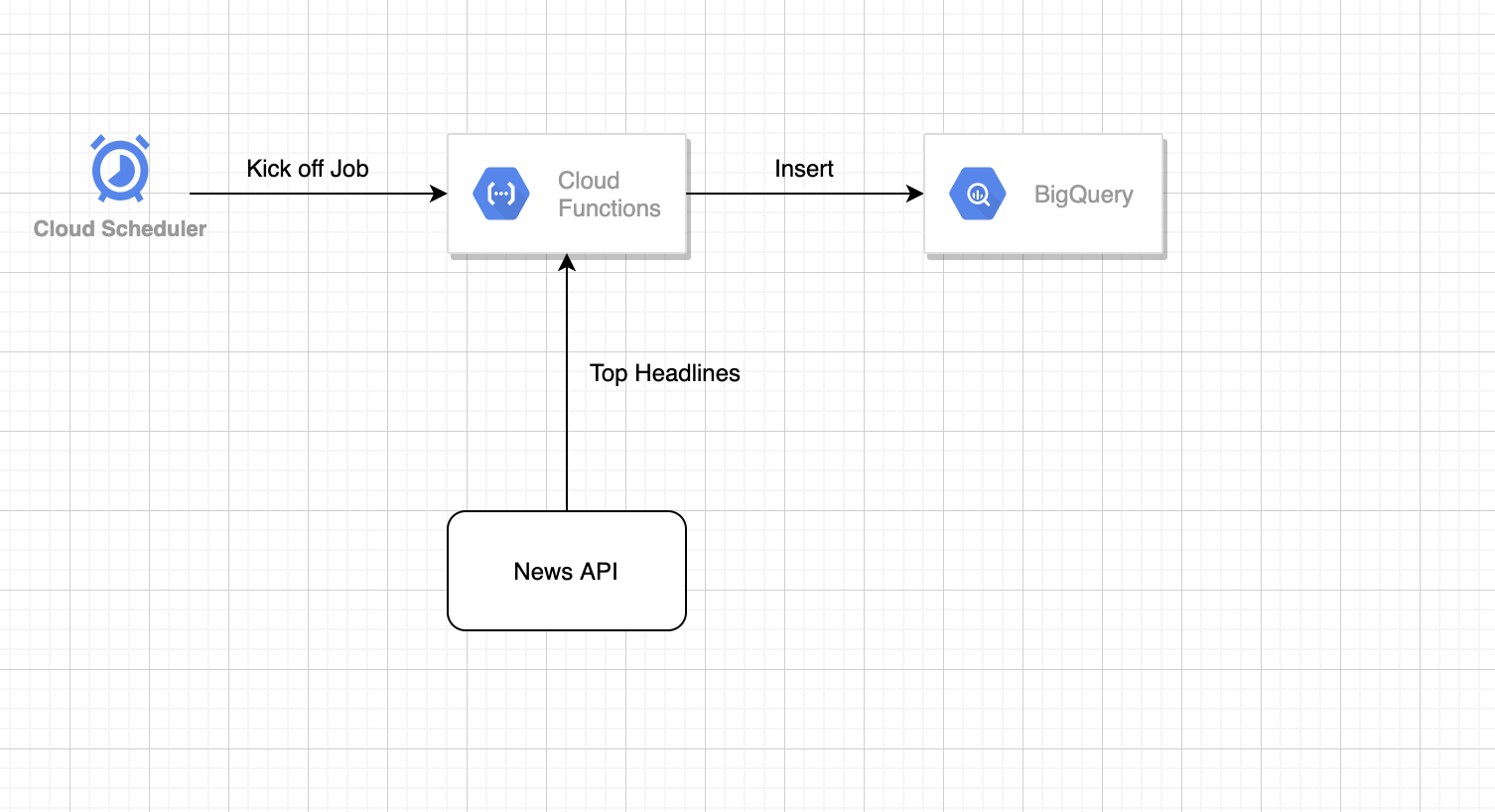
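The daily "which outlet published the most headlines" question is a simple group-and-count. The sketch below shows one possible scheduled query as a string, plus a pure-Python equivalent that is handy for checking the logic on sample rows. The table name `my-project.news.headlines` and the columns `source_name` and `ingest_date` are hypothetical; `@run_date` is the date parameter that BigQuery scheduled queries provide.

```python
from collections import Counter
from typing import Iterable, Mapping, Optional, Tuple

# Hypothetical table and column names; adjust them to your own dataset.
# @run_date is supplied by BigQuery when the query runs as a scheduled query.
TOP_OUTLET_SQL = """
SELECT source_name, COUNT(*) AS headline_count
FROM `my-project.news.headlines`
WHERE ingest_date = @run_date
GROUP BY source_name
ORDER BY headline_count DESC, source_name
LIMIT 1
"""


def top_outlet(rows: Iterable[Mapping[str, str]], day: str) -> Optional[Tuple[str, int]]:
    """Return (source_name, headline_count) for the outlet with the most
    headlines on `day`, or None if there are no rows for that day.

    Ties are broken alphabetically, mirroring the ORDER BY in TOP_OUTLET_SQL.
    """
    counts = Counter(r["source_name"] for r in rows if r["ingest_date"] == day)
    if not counts:
        return None
    # Highest count first, then alphabetical by outlet name.
    name, count = min(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return name, count
```

Adding `source_name` as a secondary sort key makes the result deterministic when two outlets tie, which plain `ORDER BY headline_count DESC LIMIT 1` does not guarantee.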

Pattern 1

Technical Design

There are many ways to build this data ingestion pipeline, but if you have decided to go serverless, the pipeline below is a good approach.

- A Cloud Function is a good fit for the API request and response handling code, and it can use the BigQuery streaming insert API to write each news headline into BigQuery.

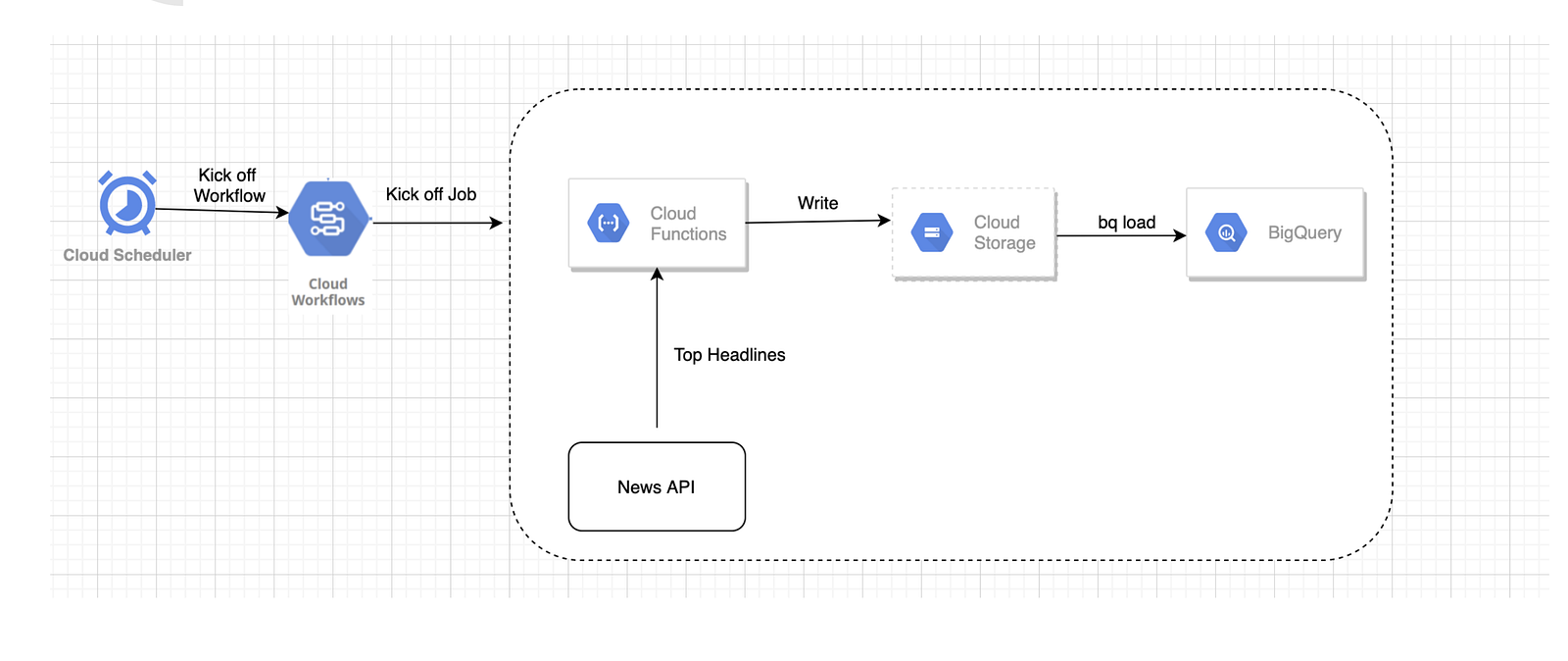
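A minimal sketch of the Pattern 1 Cloud Function in Python, assuming an HTTP-triggered function and the `google-cloud-bigquery` client library. The news API URL, the table ID and the response shape (`{"articles": [{"title": ..., "source": {"name": ...}}]}`) are all hypothetical placeholders; `Client.insert_rows_json` is the client library's streaming insert call and returns a list of per-row errors (empty on success).

```python
import datetime
import json
import urllib.request
from typing import Any, Dict, List

# Hypothetical values: replace with your news API endpoint and BigQuery table.
NEWS_API_URL = "https://news.example.com/v1/top-headlines"
TABLE_ID = "my-project.news.headlines"


def to_rows(payload: Dict[str, Any], ingest_date: str) -> List[Dict[str, Any]]:
    """Flatten the assumed API response into one BigQuery row per headline."""
    rows = []
    for article in payload.get("articles", []):
        title = article.get("title")
        if not title:
            continue  # skip records without a headline
        rows.append({
            "title": title,
            "source_name": (article.get("source") or {}).get("name") or "unknown",
            "ingest_date": ingest_date,
        })
    return rows


def stream_rows(client, table_id: str, rows: List[Dict[str, Any]]) -> int:
    """Write rows with the streaming insert API.

    `client` is a google.cloud.bigquery.Client (or a test double with the
    same insert_rows_json method).
    """
    if not rows:
        return 0
    errors = client.insert_rows_json(table_id, rows)
    if errors:
        raise RuntimeError(f"BigQuery streaming insert failed: {errors}")
    return len(rows)


def ingest_headlines(request):
    """HTTP Cloud Function entry point (e.g. triggered daily by Cloud Scheduler)."""
    # Imported lazily so the helpers above can be tested without the library.
    from google.cloud import bigquery

    with urllib.request.urlopen(NEWS_API_URL, timeout=30) as resp:
        payload = json.load(resp)
    today = datetime.date.today().isoformat()
    inserted = stream_rows(bigquery.Client(), TABLE_ID, to_rows(payload, today))
    return f"inserted {inserted} rows", 200
```

Keeping the parsing (`to_rows`) separate from the I/O makes the transformation easy to unit test with a fake client before deploying.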

Pattern 2

Technical Design

In this approach, we split ingestion and loading into separate steps. The API request and response handling code still lives in a Cloud Function, but instead of writing to BigQuery through the streaming insert API, it writes all the news headline records to a file in Google Cloud Storage (GCS). A BigQuery batch load job then loads the data from the GCS file into BigQuery.
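For the Pattern 2 ingestion step, the Cloud Function only has to serialize the records and drop them into GCS. The sketch below assumes the `google-cloud-storage` client library, a hypothetical bucket name and a hypothetical one-file-per-day object layout; it writes newline-delimited JSON, which is the JSON format BigQuery load jobs accept.

```python
import json
from typing import Any, Dict, Iterable

# Hypothetical bucket name; the object layout below is also an assumption.
BUCKET = "my-news-landing-bucket"


def to_ndjson(rows: Iterable[Dict[str, Any]]) -> str:
    """Serialize rows as newline-delimited JSON (one JSON object per line)."""
    return "".join(json.dumps(row, ensure_ascii=False) + "\n" for row in rows)


def object_name(ingest_date: str) -> str:
    """One file per day, e.g. headlines/2024-05-01/headlines.json."""
    return f"headlines/{ingest_date}/headlines.json"


def write_to_gcs(rows: Iterable[Dict[str, Any]], ingest_date: str,
                 bucket_name: str = BUCKET) -> str:
    """Upload the day's rows to GCS and return the gs:// URI for the load step."""
    # Imported lazily so the pure helpers above can be tested offline.
    from google.cloud import storage

    name = object_name(ingest_date)
    blob = storage.Client().bucket(bucket_name).blob(name)
    blob.upload_from_string(to_ndjson(rows), content_type="application/json")
    return f"gs://{bucket_name}/{name}"
```

Returning the `gs://` URI gives the orchestration layer something concrete to pass to the load step.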

We orchestrate the ingestion and load steps with Cloud Workflows, another serverless offering in GCP.

Note: The streaming inserts used in Pattern 1 incur an additional charge, while batch loading data into BigQuery (using the default shared slot pool) is free; in both cases you still pay for the data stored in BigQuery.
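The load step boils down to a single BigQuery load job. In the design above Cloud Workflows triggers it; the sketch below issues the same kind of job with the `google-cloud-bigquery` Python client, which can be useful for testing the load configuration locally. It assumes the target table (hypothetical ID `my-project.news.headlines`) already exists with a matching schema, and that the day's files sit under a hypothetical `headlines/<date>/` prefix in the bucket.

```python
# Hypothetical table ID; the table is assumed to exist with a matching schema.
TABLE_ID = "my-project.news.headlines"


def source_uri(bucket_name: str, ingest_date: str) -> str:
    """gs:// URI for the day's newline-delimited JSON files.

    The single '*' wildcard lets one load job pick up every file in the folder.
    """
    return f"gs://{bucket_name}/headlines/{ingest_date}/*.json"


def batch_load(bucket_name: str, ingest_date: str, table_id: str = TABLE_ID) -> int:
    """Run a BigQuery batch load job from GCS and return the number of rows loaded."""
    # Imported lazily so source_uri can be tested without the library installed.
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.NEWLINE_DELIMITED_JSON,
        write_disposition=bigquery.WriteDisposition.WRITE_APPEND,
    )
    job = client.load_table_from_uri(
        source_uri(bucket_name, ingest_date), table_id, job_config=job_config
    )
    job.result()  # block until the job finishes; raises if the load failed
    return job.output_rows
```

With `WRITE_APPEND`, re-running the load for the same day appends the rows again, so a retry policy in the workflow should take that into account.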

In Part 2 of this blog, I share the code and configuration for the pattern described above.

Happy Data Pipeline Building! ✌️